Abstract

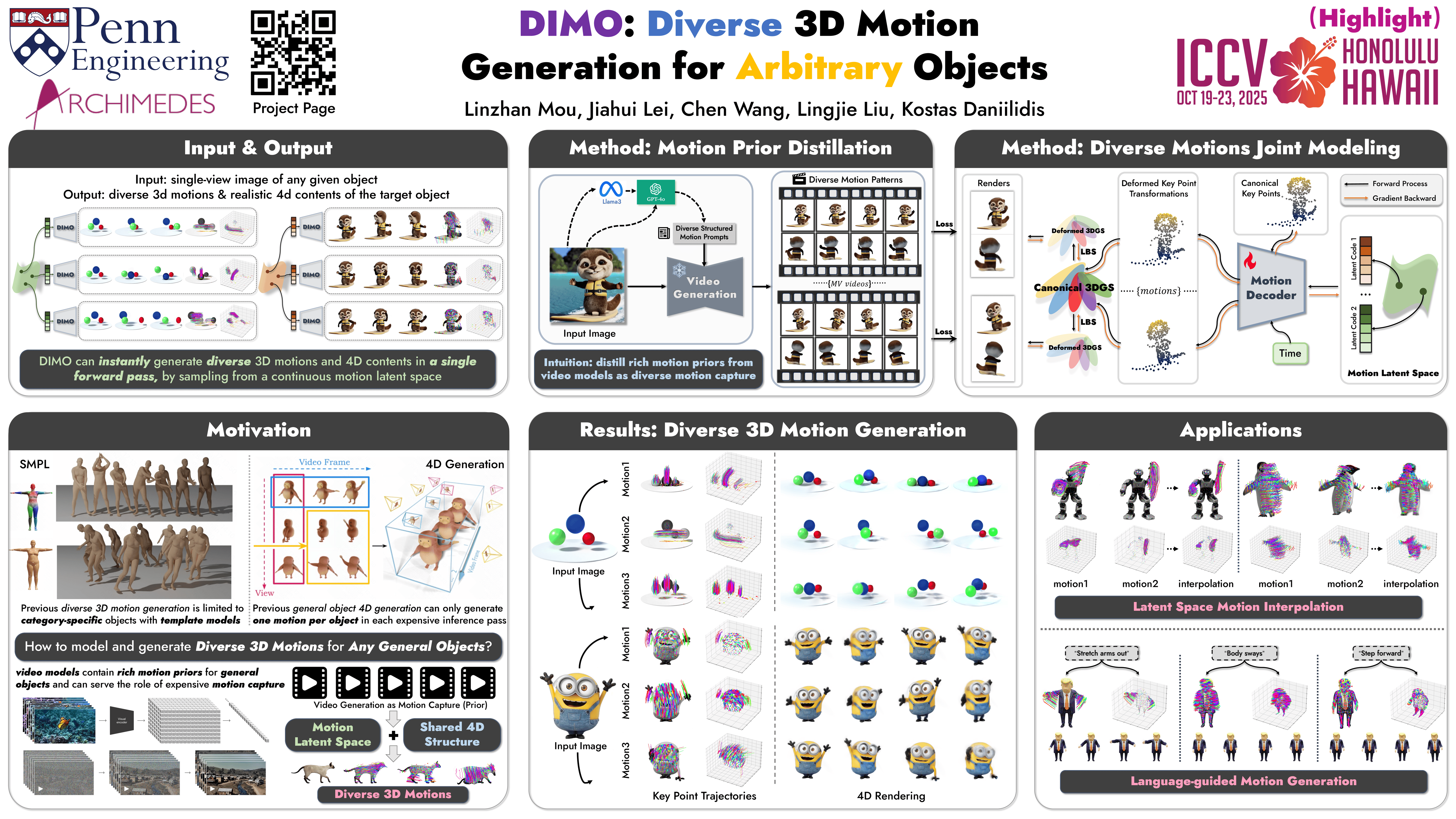

We present DIMO, a generative approach that produces diverse 3D motions for arbitrary objects from a single image. The core idea of our work is to leverage the rich priors of well-trained video models to extract common motion patterns and embed them into a shared low-dimensional latent space. Specifically, we first generate multiple videos of the same object with diverse motions. We then embed each motion into a latent vector and train a shared motion decoder to learn the distribution of motions, which are expressed in a structured and compact representation, i.e., neural key point trajectories. Canonical 3D Gaussians are then driven by these key points and fused to model geometry and appearance. At inference time, the learned latent space lets us instantly sample diverse 3D motions in a single forward pass and supports applications such as 3D motion interpolation and language-guided motion generation.
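To make the "shared latent space plus shared decoder" idea concrete, here is a minimal sketch of sampling and interpolating motion codes. It is not DIMO's implementation: the decoder is a hypothetical fixed random linear map standing in for a learned network, the sizes `LATENT_DIM`, `NUM_KEYPOINTS`, and `NUM_FRAMES` are illustrative, and drawing codes from a standard normal prior is an assumption (a common choice when per-sequence codes are regularized toward zero).

```python
import random

LATENT_DIM = 8      # hypothetical latent code size
NUM_KEYPOINTS = 4   # hypothetical number of neural key points
NUM_FRAMES = 5      # hypothetical number of frames per motion


def make_decoder(seed=0):
    """Hypothetical stand-in for the shared motion decoder: a fixed random
    linear map from (latent code, normalized time) to per-key-point 3D offsets."""
    rng = random.Random(seed)
    out_dim = NUM_KEYPOINTS * 3
    weights = [[rng.gauss(0.0, 0.1) for _ in range(LATENT_DIM + 1)]
               for _ in range(out_dim)]

    def decode(z, t):
        x = list(z) + [t]  # condition the decoder on time t in [0, 1]
        flat = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
        # Group the flat output into one (dx, dy, dz) offset per key point.
        return [flat[3 * k:3 * k + 3] for k in range(NUM_KEYPOINTS)]

    return decode


def decode_trajectory(decode, z):
    """Decode one latent code into key point offsets for every frame."""
    return [decode(z, f / (NUM_FRAMES - 1)) for f in range(NUM_FRAMES)]


def sample_latent(rng):
    """Draw a motion code from an assumed standard normal prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]


def lerp(z_a, z_b, alpha):
    """Linearly interpolate between two motion codes."""
    return [(1 - alpha) * a + alpha * b for a, b in zip(z_a, z_b)]


if __name__ == "__main__":
    rng = random.Random(42)
    decode = make_decoder()
    z_a, z_b = sample_latent(rng), sample_latent(rng)
    # Decode a motion halfway between two sampled motions.
    mid = decode_trajectory(decode, lerp(z_a, z_b, 0.5))
    print(len(mid), len(mid[0]), len(mid[0][0]))  # frames, key points, xyz
```

Because every motion shares one decoder, sampling a new motion or blending two motions reduces to picking a new code and running one forward pass. With this toy linear decoder, interpolated codes decode to exactly the average trajectory; a learned nonlinear decoder would not have that property, which is part of why interpolating in latent space can yield motions that are more than simple blends.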

Method

Given a single-view image of an arbitrary object, DIMO first distills rich motion priors from video models. We then represent each motion as structured neural key point trajectories. During training, we embed each motion sequence into a latent code in the motion latent space and jointly model diverse motion patterns with a shared motion decoder. The decoded key point transformations drive canonical 3D Gaussian Splatting (3DGS) for 4D optimization using only photometric losses.
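One way decoded key point transformations can drive canonical Gaussians is linear blend skinning. The sketch below assumes each key point carries a rigid transform (rotation `R_k`, translation `t_k`) and that each Gaussian center is blended with normalized Gaussian RBF weights over key point distances. This weighting scheme, the function names, and the `sigma` value are illustrative assumptions, not DIMO's confirmed formulation. For brevity, only Gaussian centers are deformed here; a full version would also rotate each Gaussian's orientation.

```python
import math


def mat_vec(m, v):
    """Multiply a 3x3 matrix (nested lists) by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def rbf_weights(x, keypoints, sigma):
    """Normalized Gaussian RBF weights of point x w.r.t. canonical key points."""
    d2 = [sum((xi - pi) ** 2 for xi, pi in zip(x, p)) for p in keypoints]
    m = min(d2)  # shift by the nearest distance so the largest weight is 1 (no underflow)
    raw = [math.exp(-(d - m) / (2.0 * sigma ** 2)) for d in d2]
    total = sum(raw)
    return [r / total for r in raw]


def deform_center(x, keypoints, rotations, translations, sigma=0.5):
    """Linear blend skinning of one canonical Gaussian center:
    x' = sum_k w_k * (R_k (x - p_k) + p_k + t_k)."""
    weights = rbf_weights(x, keypoints, sigma)
    out = [0.0, 0.0, 0.0]
    for w, p, R, t in zip(weights, keypoints, rotations, translations):
        local = [xi - pi for xi, pi in zip(x, p)]  # offset from key point
        moved = mat_vec(R, local)                  # rotate about the key point
        for i in range(3):
            out[i] += w * (moved[i] + p[i] + t[i])
    return out


if __name__ == "__main__":
    # A single key point at the origin rotating 90 degrees about the z-axis.
    rz90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
    print(deform_center([1.0, 0.0, 0.0], [[0.0, 0.0, 0.0]], [rz90], [[0.0, 0.0, 0.0]]))
    # prints [0.0, 1.0, 0.0]
```

Because the weights sum to one, a transform shared by all key points moves every Gaussian rigidly, while differing per-key-point transforms yield smooth non-rigid deformation. Only the compact set of key point trajectories needs to be decoded per frame, instead of a motion for every Gaussian.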


Citation

@inproceedings{mou2025dimo,
  title={DIMO: Diverse 3D Motion Generation for Arbitrary Objects},
  author={Mou, Linzhan and Lei, Jiahui and Wang, Chen and Liu, Lingjie and Daniilidis, Kostas},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={14357--14368},
  year={2025}
}